Welcome to my home page. I am an Associate Professor in the Interactive Intelligence group at TU Delft. Additionally, you might be interested in the following:

- I am one of the scientific directors of the Mercury Machine Learning Lab.

- I am director and one of the co-founders of the ELLIS Delft Unit, as well as an ELLIS Scholar.

- I am co-organizing the COMARL Seminar series.

- I am a senior member of AAAI.

- I am a board member of IFAAMAS.

- I am an associate editor for JAIR and AIJ.

- Find me on Mastodon or Twitter.

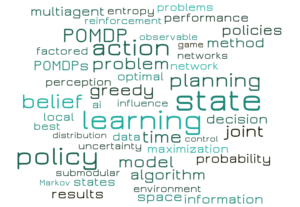

My main research interests lie in what I call interactive learning and decision making: the intersection of AI, machine learning and game theory that focuses on an intelligent agent that interacts with a complex world. My long-term vision is the construction of a collaborative AI scientist. In the short term, I try to generate fundamental knowledge about algorithms and models for learning complex tasks. Specifically, I believe that agents need models to support intelligent decision making. Learning such models is difficult, and given that our world constantly changes, we cannot assume that agents will ever learn perfect models. Instead, we need to endow them with the capability to learn these models online, i.e., while interacting with their environments: they need to be able to use imperfect models, reason about the uncertainty in their predictions, and actively learn to improve these models (balancing task rewards and knowledge gathering). In addition, I think about how such abstract models might be applied to a variety of real-world tasks such as collaboration in multi-robot or human-AI systems, optimization of traffic control systems, intelligent e-commerce agents, etc.

For more information about my research, look here.

For research opportunities, look here.

For more information about possible student projects (current TU Delft students), look here.

News

-

August 28th, 2024: Outstanding Paper Award on Scientific Understanding @ RLC 2024

Very proud of my (graduated) student Miguel. His persistence made the difference for this final part of his PhD thesis!

-

June 5th, 2024: Looking for a PhD student

With Julia Olkhovskaia as the main supervisor, I am looking for a (fully paid) PhD student.

See details on my vacancy page.

-

February 20th, 2023: Wanted: assist./assoc. professor in causal reinforcement learning

At TU Delft, we are recruiting an assist./assoc. professor in causal reinforcement learning.

More info here.

-

December 2nd, 2022: Comments for Volkskrant

For the Volkskrant, I commented on the Stratego article in Science. Read it here.

-

September 24th, 2022: Berkeley MARL Seminar talk online

The talk that I gave for the Berkeley MARL seminar can now be seen on YouTube.

It gives an introduction to ideas of influence-based abstraction, focusing also on the inspirations from multiagent planning, as well as implications for future MARL.

-

September 15th, 2022: DIALS accepted to NeurIPS’22

Our paper Distributed Influence-Augmented Local Simulators for Parallel MARL in Large Networked Systems was accepted to NeurIPS! It shows how influence-based abstraction can be used to parallelize and thus speed up multiagent reinforcement learning, while stabilizing the learning at the same time.

-

June 30th, 2022: 2 ILDM papers to appear at ICML

Our group will be presenting 2 papers at ICML’22:

On the Impossibility of Learning to Cooperate with Adaptive Partner Strategies in Repeated Games

Come find us at ICML, or reach out over email!

-

April 20th, 2022: ILDM@AAMAS

There are 4 ILDM papers that will be presented at the main AAMAS conference. Here is the schedule in CEST:

MORAL: Aligning AI with Human Norms through Multi-Objective Reinforced Active Learning

Markus Peschl, Arkady Zgonnikov, Frans Oliehoek and Luciano Siebert

1A2-2 – CEST (UTC +2) Wed, 11 May 2022 18:00

2C5-1 – CEST (UTC +2) Thu, 12 May 2022 09:00

Best-Response Bayesian Reinforcement Learning with BA-POMDPs for Centaurs

Mustafa Mert Çelikok, Frans A. Oliehoek and Samuel Kaski

2C2-2 – CEST (UTC +2) Thu, 12 May 2022 10:00

2A4-3 – CEST (UTC +2) Thu, 12 May 2022 20:00

LEARN BADDr: Bayes-Adaptive Deep Dropout RL for POMDPs

Sammie Katt, Hai Nguyen, Frans Oliehoek and Christopher Amato

1A2-2 – CEST (UTC +2) Wed, 11 May 2022 18:00

3B3-2 – CEST (UTC +2) Fri, 13 May 2022 03:00

Speeding up Deep Reinforcement Learning through Influence-Augmented Local Simulators

Miguel Suau, Jinke He, Matthijs Spaan and Frans Oliehoek

Poster session PDC2 – CEST (UTC +2) Thu, 12 May 2022 12:00

Poincaré-Bendixson Limit Sets in Multi-Agent Learning (Best paper runner-up)

Aleksander Czechowski and Georgios Piliouras

1A4-1 – CEST (UTC +2) Wed, 11 May 2022 17:00

3C1-1 – CEST (UTC +2) Fri, 13 May 2022 09:00

-

April 20th, 2022: Senior Member AAAI

I was elected as a senior member of the Association for the Advancement of Artificial Intelligence (AAAI). The senior member status recognizes “AAAI members who have achieved significant accomplishments within the field of artificial intelligence”. I thank my nominators and the committee for this great honor.

-

March 2nd, 2022: First place ILDM team in RangL Pathways to Net Zero challenge

Aleksander Czechowski and Jinke He form one of the winning teams (‘Epsilon-greedy’) of the RangL Pathways to Net Zero challenge!

The challenge was to find the optimal pathway to a carbon neutral 2050. ‘RangL’ is a competition platform created at The Alan Turing Institute as a new model of collaboration between academia and industry. RangL offers an AI competition environment for practitioners to apply classical and machine learning techniques and expert knowledge to data-driven control problems.

More information: https://rangl.org/blog/ and https://github.com/rangl-labs/netzerotc.

Old news

- August 2017 Great news: I have won an ERC Starting Grant!

- August 2017 Our article on active perception is published in Autonomous Robots.

- May 2017 ICML accepted our paper ‘Learning in POMDPs with Monte Carlo Tree Search’

- May 2017 EPSRC will fund my ‘first grant’ proposal. I will be looking for a postdoc with interests in multiagent systems, deep/reinforcement learning.

- April 2017 1000 citations – yay! 😉

- April 2017 Our AAMAS paper is nominated for best paper!

- Feb 2017 Daniel Claes has been doing awesome work getting our Warehouse Commissioning planning methods to work on real robots.

- Feb 2017 MADP Toolbox 0.4.1 released!

- Feb 2017 AAMAS accepted our paper on Multi-Robot Warehouse Commissioning, yay!

- Oct 2016 My student Elise’s thesis on traffic light control via deep reinforcement learning will be presented at BNAIC. We also have a video that nicely illustrates the behavior of our novel deep Q-learning + transfer planning approach (also described in this demo paper). A NIPS workshop paper is under submission; let me know if you are interested.

- Aug 2016 I am co-organising the NIPS workshop on Learning, Inference and Control of Multi-Agent Systems taking place Friday 9th December 2016, Barcelona, Spain. Join us!

- Jul 2016 The book on Dec-POMDPs I wrote with Chris Amato got published! Check it out here or at Springer.

- Apr 2016 Our paper on PAC Greedy Maximisation with Efficient Bounds on Information Gain for Sensor Selection is accepted at IJCAI. I will also present the poster at AAMAS in Singapore.

- Dec 2015 AAAI ’16 has accepted 4 of my papers and they are now on my publication page.

- Sept 2015 I will give an invited talk at the NIPS 2015 Workshop on Learning, Inference and Control of Multi-Agent Systems, Montreal

- July 2015 I am co-organizing the AAAI spring symposium on Challenges and Opportunities in Multiagent Learning for the Real World. While multiagent learning has been an active area of research for the last two decades, many challenges (such as uncertainty, partial observability, communication limitations, etc.) remain to be solved…!

- June 2015 I am co-organizing the Sequential Decision Making for Intelligent Agents AAAI fall symposium. We aim to bring together researchers that work on formal decision making methods (MDPs, POMDPs, Dec-POMDPs, I-POMDPs, etc.)

- April 2015 MADP v0.3.1 released!

- April 2015 Yay – IJCAI has accepted three of my papers!

- February 2015 Our article Computing Convex Coverage Sets for Faster Multi-Objective Coordination was accepted for publication in the Journal of AI Research.

- January 2015 Our work on computing upper bounds for factored Dec-POMDPs will appear at AAMAS as an extended abstract. Check out the extended version.

- January 2015 AAMAS has accepted our work on spatial task allocation problems (SPATAPs), see here.

- November 2014 AAAI has accepted two of my papers! One is about exploiting factored value functions in POMCP. The other investigates the use of sub-modularity in the full sequential POMDP setting.

- October 2014 A recent development in Dec-POMDP town is that these beasts can be reduced to a special case of (centralized) POMDPs. Chris and I decided it was time to try and give a quick overview of this approach in this technical report.

- July 2014 I started as a Lecturer at the University of Liverpool.

- May 2014 It’s been a while, but we are releasing a new version of the MADP Toolbox! Download it now: here. We are interested in your feedback, so let us know if you have problems or suggestions.

- April 2014 Chris Amato and I are doing interesting things on Bayesian reinforcement learning for MASs under state uncertainty. See our MSDM paper and technical report for more information.

- December 2013 Our paper Bounded Approximations for Linear Multi-Objective Planning under Uncertainty was accepted for publication at ICAPS.

- December 2013 Good news from AAMAS: A POMDP Based Approach to Optimally Select Sellers in Electronic Marketplaces and Linear Support for Multi-Objective Coordination Graphs both got accepted as full papers!

- October 2013 Our paper Effective Approximations for Spatial Task Allocation Problems was runner up for best paper at BNAIC.

- July 2013 I have been awarded a Veni grant for three years of research!

- June 2013 Presentations of AAMAS and an invited talk at CWI can now be found under Research highlights.

- April 2013 My paper Sufficient Plan-Time Statistics for Decentralized POMDPs is accepted at IJCAI 2013!

- April 2013 Christopher Amato and I are working on Bayesian RL for multiagent systems under uncertainty. See our paper here.

- February 2013 JAIR has accepted our article Incremental Clustering and Expansion for Faster Optimal Planning in Decentralized POMDPs for publication!

- December 2012 Our paper Approximate Solutions for Factored Dec-POMDPs with Many Agents is accepted as a full paper at AAMAS 2013!

- December 2012 Our paper Multi-Objective Variable Elimination for Collaborative Graphical Games is accepted as an extended abstract at AAMAS 2013.

- June 2012 Our paper Exploiting Structure in Cooperative Bayesian Games was accepted at UAI 2012!

- June 2012 Yay! I won the best PC member award at AAMAS 2012!

- June 2012 The 7th MSDM workshop was very interesting! Check the proceedings at the MSDM website.

- April 2012 Two of my papers will appear at AAAI!

- January 2012 My book chapter on decentralized POMDPs is finally published! It can be used as an introduction to Dec-POMDPs and also provides insight into how the current state-of-the-art algorithms (forward heuristic search and backward dynamic programming) for finite-horizon Dec-POMDPs relate to each other.

- December 2011 Two of my papers will appear at AAMAS: a full paper about heuristic search of multiagent influence and an extended abstract about an effective method for performing the vector-based backup in settings with delayed communication.

- July 2010 I’ve started at MIT on July 15th!

- Look, my thesis is on Google Books!

- February 2010 On February 12th 2010 I successfully defended my thesis, titled “Value-Based Planning for Teams of Agents in Stochastic Partially Observable Environments”.

- Prashant Doshi and his students Christopher Jackson and Kenneth Bogert used the Multiagent Decision Process (MADP) Toolbox to compute policies in the game of StarCraft. Watch the (pretty cool) video.